Memory Graph Connectivity for AI Agents

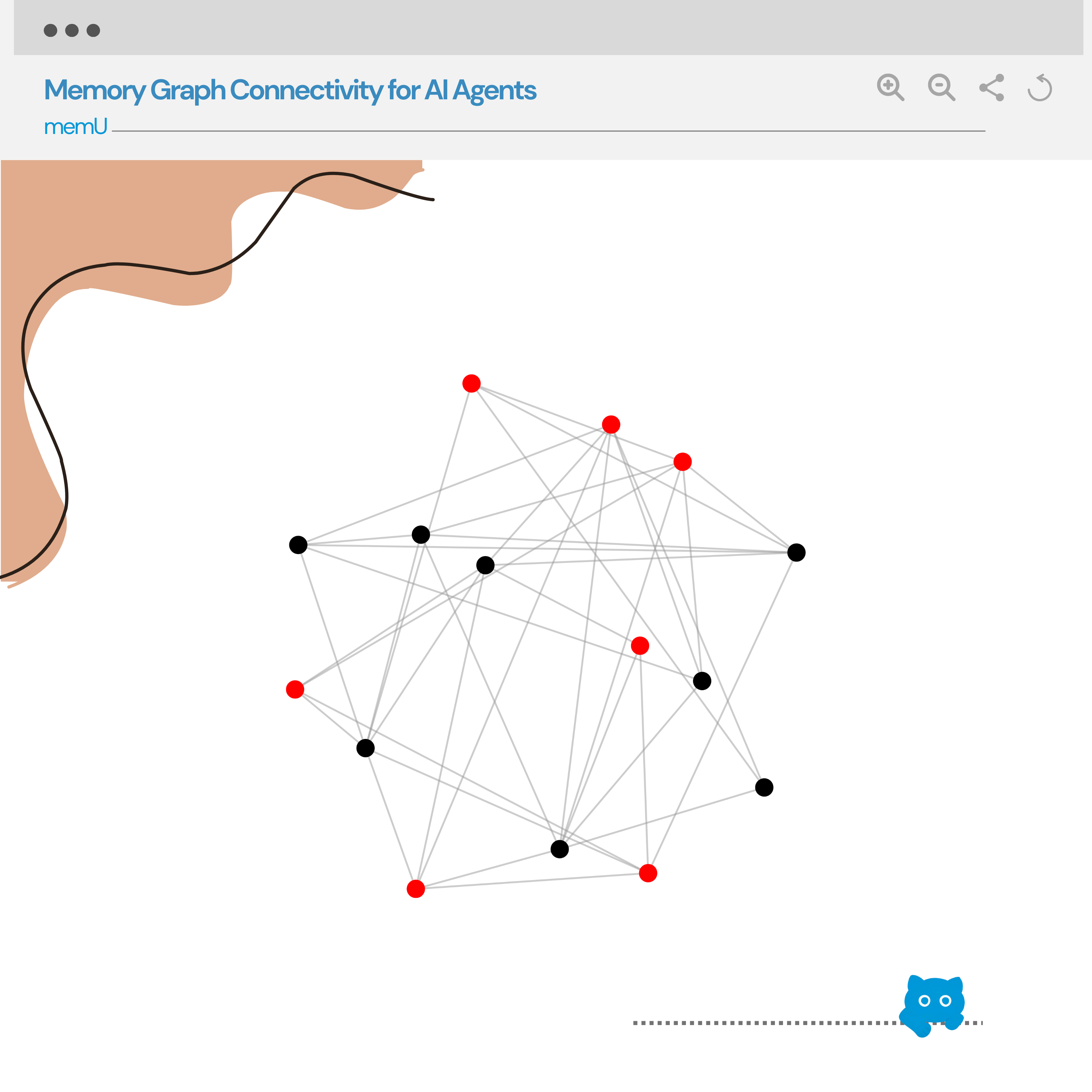

Transform Isolated Memory Items into an Interconnected Knowledge Network

memU enriches AI memory by linking related memory items and clustering them into topic- and time-aware subgraphs. This allows your AI agents to traverse, retrieve, and reason across connected memories efficiently.
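To make the idea concrete, here is a minimal Python sketch of a memory graph. It is not memU's implementation or API. The `MemoryItem` and `MemoryGraph` classes, the rule that links two items when they share a topic label and were created within a 7-day window, and the breadth-first `neighbors_within` traversal are all hypothetical choices made for illustration. Connected components of the resulting graph stand in for the topic- and time-aware subgraphs described above.

```python
from __future__ import annotations

from collections import defaultdict, deque
from dataclasses import dataclass, field
from datetime import datetime, timedelta


@dataclass
class MemoryItem:
    # Hypothetical shape of a memory item; not memU's actual schema.
    item_id: str
    text: str
    topic: str
    created_at: datetime


@dataclass
class MemoryGraph:
    items: dict[str, MemoryItem] = field(default_factory=dict)
    # Undirected adjacency list: item_id -> ids of linked items.
    edges: dict[str, set[str]] = field(default_factory=lambda: defaultdict(set))

    def add(self, item: MemoryItem, window: timedelta = timedelta(days=7)) -> None:
        """Add an item, linking it to stored items on the same topic within `window`."""
        for other in self.items.values():
            same_topic = other.topic == item.topic
            close_in_time = abs(other.created_at - item.created_at) <= window
            if same_topic and close_in_time:
                self.edges[item.item_id].add(other.item_id)
                self.edges[other.item_id].add(item.item_id)
        self.items[item.item_id] = item

    def neighbors_within(self, start: str, depth: int = 2) -> list[str]:
        """Breadth-first traversal from `start` (included), up to `depth` hops."""
        order = [start]
        visited = {start}
        queue = deque([(start, 0)])
        while queue:
            node, dist = queue.popleft()
            if dist == depth:
                continue  # do not expand beyond the hop limit
            for nxt in sorted(self.edges[node]):
                if nxt not in visited:
                    visited.add(nxt)
                    order.append(nxt)
                    queue.append((nxt, dist + 1))
        return order

    def subgraphs(self) -> list[set[str]]:
        """Connected components, i.e. clusters of linked memories."""
        seen: set[str] = set()
        clusters: list[set[str]] = []
        for start in self.items:
            if start in seen:
                continue
            # A path never needs more hops than there are items.
            component = set(self.neighbors_within(start, depth=len(self.items)))
            seen |= component
            clusters.append(component)
        return clusters


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1)
    g = MemoryGraph()
    g.add(MemoryItem("m1", "User prefers dark mode", "preferences", t0))
    g.add(MemoryItem("m2", "User changed editor theme", "preferences", t0 + timedelta(days=2)))
    g.add(MemoryItem("m3", "User asked about Rust lifetimes", "programming", t0 + timedelta(days=3)))
    g.add(MemoryItem("m4", "User set dark theme on phone", "preferences", t0 + timedelta(days=30)))
    print(g.neighbors_within("m1"))  # ['m1', 'm2']
    print(sorted(sorted(c) for c in g.subgraphs()))  # [['m1', 'm2'], ['m3'], ['m4']]
```

Here `m4` shares a topic with `m1` and `m2` but falls outside the time window, so it forms its own cluster. Because links are pairwise, a chain of items each within the window of its neighbor can still form one subgraph that spans a longer period; a production system would more plausibly decide relatedness with semantic similarity than with exact topic labels.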

Core Memory Graph Connectivity Features

How Memory Graph Connectivity Works

How AI Agents Use Memory Graphs

Why Memory Graph Connectivity Matters

Ready to Unlock the Full Potential of AI Memory?

Memory Storage: Store complete historical data from your AI system, preserving full context across conversations, logs, and multi-modal inputs for reliable retrieval and analysis.

Memory Item: Manage and store individual memory entries for your AI, making each piece of data instantly accessible.

Memory Category: Organize memories into categories for better retrieval, context management, and structured learning.

Memory Retrieval: Access relevant memories instantly using LLM-based semantic reading or RAG-based vector search.

Memory Graph Connectivity (this page): Transform isolated memory items into an interconnected knowledge network.

Self-evolving Memory: AI memories automatically adapt and evolve over time, improving performance without manual intervention.

Multimodal Memory: Store and recall text, images, audio, and video seamlessly within a single AI memory system.

Multi-agent Memory: Enable multiple AI agents to share and coordinate memories, enhancing collaboration and collective intelligence.

Agentic Memory: With memU's agentic architecture, you can build AI applications that truly remember their users through autonomous memory management.

File Based Memory: Treat memory like files: readable, structured, and persistently useful.

How to Save Your AI Agent’s Memories with memU

Cloud Platform: Use the memU cloud platform to quickly store and manage AI memories without any setup, giving you immediate access to the full range of features.

Open Source (GitHub): Download the open-source version and deploy it yourself, giving you full control over your AI memory storage on local or private servers.

Contact Us: If you want a hassle-free experience or need advanced memory features, reach out to our team for custom support and services.

FAQ

Agent memory (also known as agentic memory) is an advanced AI memory system where autonomous agents intelligently manage, organize, and evolve memory structures. It enables AI applications to autonomously store, retrieve, and manage information with higher accuracy and faster retrieval than traditional memory systems.

memU improves AI memory performance in three ways: higher accuracy through intelligent memory organization, faster retrieval through optimized indexing and caching, and lower cost by reducing redundant storage and API calls.

Agentic memory offers autonomous memory management, automatic organization and linking of related information, continuous evolution and optimization, contextual retrieval, and reduced human intervention compared to traditional static memory systems.

Yes, memU is an open-source agent memory framework. You can self-host it, contribute to the project, and integrate it into your LLM applications. We also offer a cloud version for easier deployment.

Agent memory can be used in various LLM applications including AI assistants, chatbots, conversational AI, AI companions, customer support bots, AI tutors, and any application that requires contextual memory and personalization.

While vector databases provide semantic search capabilities, agent memory goes beyond by autonomously managing memory lifecycle, organizing information into interconnected knowledge graphs, and evolving memory structures over time based on usage patterns and relevance.
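One generic way to manage a memory lifecycle "based on usage patterns and relevance" is usage-weighted exponential decay. The sketch below is hypothetical and is not memU's documented algorithm: `TrackedMemory`, `relevance`, `record_access`, `prune`, the 7-day half-life, and the 0.1 archive threshold are illustrative assumptions. Each access raises an item's score, idle time lowers it, and items that fall below the threshold are archived instead of staying in the active set.

```python
from __future__ import annotations

import math
from dataclasses import dataclass

DAY = 86_400.0  # seconds


@dataclass
class TrackedMemory:
    # Hypothetical usage record; not memU's internal schema.
    item_id: str
    access_count: int = 0
    last_access: float = 0.0  # seconds since epoch


def relevance(mem: TrackedMemory, now: float, half_life: float = 7 * DAY) -> float:
    """Log-scaled usage, halved for every `half_life` seconds since the last access."""
    age = max(0.0, now - mem.last_access)
    decay = 0.5 ** (age / half_life)
    return math.log1p(mem.access_count) * decay


def record_access(mem: TrackedMemory, now: float) -> None:
    """Register a retrieval: bump the count and reset the decay clock."""
    mem.access_count += 1
    mem.last_access = now


def prune(
    memories: list[TrackedMemory], now: float, threshold: float = 0.1
) -> tuple[list[TrackedMemory], list[TrackedMemory]]:
    """Split memories into (kept, archived) by their current relevance."""
    kept: list[TrackedMemory] = []
    archived: list[TrackedMemory] = []
    for mem in memories:
        if relevance(mem, now) >= threshold:
            kept.append(mem)
        else:
            archived.append(mem)
    return kept, archived


if __name__ == "__main__":
    now = 60 * DAY
    fresh = TrackedMemory("a", access_count=5, last_access=59 * DAY)
    stale = TrackedMemory("b", access_count=1, last_access=0.0)
    kept, archived = prune([fresh, stale], now)
    print([m.item_id for m in kept], [m.item_id for m in archived])  # ['a'] ['b']
```

The logarithm keeps a handful of very frequent accesses from dominating, and the half-life controls how quickly unused memories fade; both would be tuning choices in any real system.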

Yes, memU integrates with popular LLM frameworks, including LangChain, LangGraph, and CrewAI, and with model providers such as OpenAI and Anthropic. Our SDK provides simple APIs for memory operations across these platforms.

memU offers autonomous memory organization, intelligent memory linking, continuous memory evolution, contextual retrieval, multi-modal memory support, real-time synchronization, and extensive integration options with LLM frameworks.

Start Building Smarter AI Agents Today

Give your AI the power to remember everything that matters and unlock its full potential with memU. Don’t wait — start creating smarter, more capable AI agents now.